Donghyun Son

I’m currently looking for Ph.D. opportunities that are aligned with my interests. Feel free to reach me!

I am an undergraduate researcher majoring in Computer Science and Engineering at Seoul National University, working on efficient machine learning.

I aim to build an intelligent system that delivers strong problem-solving capabilities at low-cost. I believe efficiency is key to achieving the goal, and focus on two directions: lowering the inference cost of large models, and improving training efficiency. My current interests include:

- efficient long-context inference

- data- and compute-efficient training of large models

- system-level optimization of ML workloads

I have worked on making large language models efficient, including KV cache quantization (NeurIPS 2025, with Prof. Sungjoo Yoo) and systems for agentic workflows (under review, with Dr. Saurabh Agarwal and Prof. Aditya Akella).

Aside from research, I enjoy algorithmic problem solving and have competed in competitive programming contests. You can find me on codeforces and BOJ.

Links: Github | CV | Google Scholar | X

Selected Publications

- NeurIPS 2025

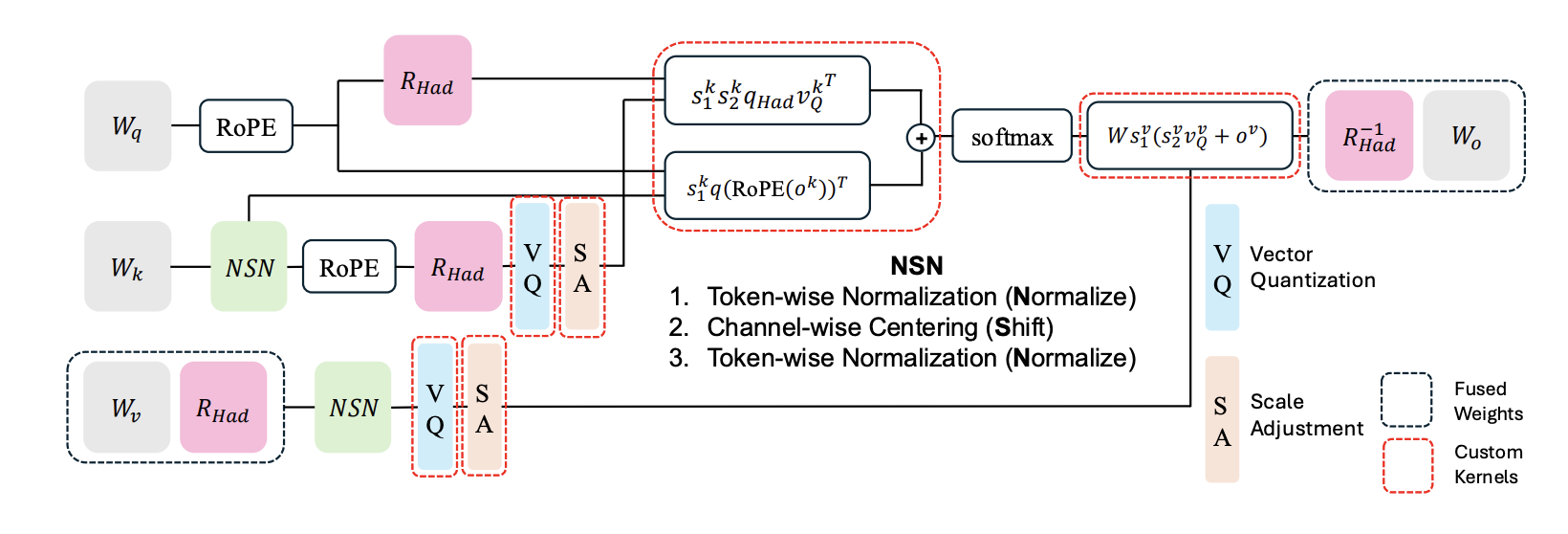

NSNQuant: A Double Normalization Approach for Calibration-Free Low-Bit Vector Quantization of KV CacheIn Advances in Neural Information Processing Systems (NeurIPS), 2025

NSNQuant: A Double Normalization Approach for Calibration-Free Low-Bit Vector Quantization of KV CacheIn Advances in Neural Information Processing Systems (NeurIPS), 2025 - WSDM 2023 (Oral)

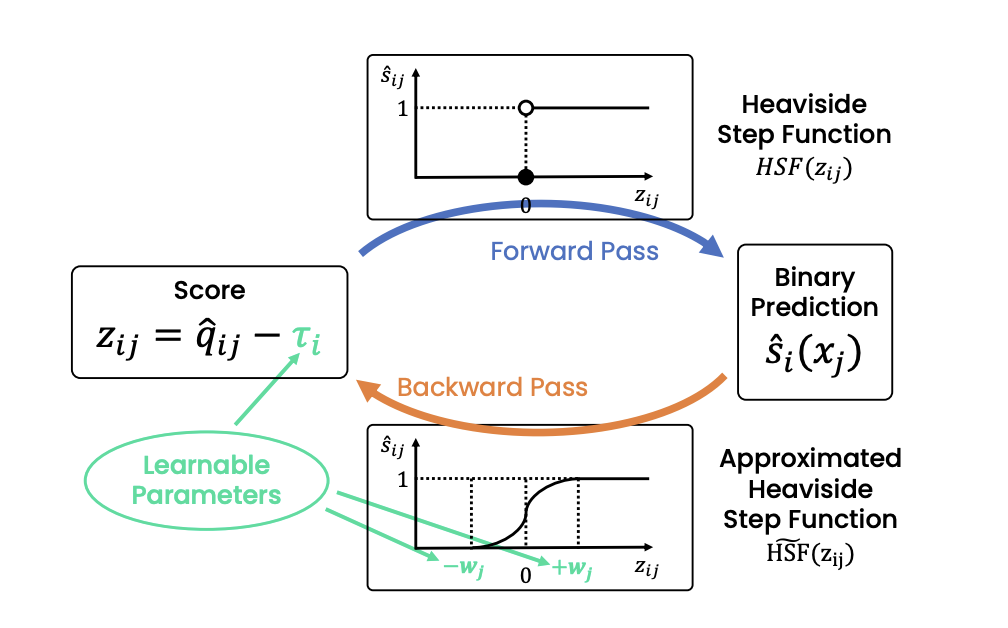

Reliable decision from multiple subtasks through threshold optimization: Content moderation in the wildIn Proceedings of the Sixteenth ACM International Conference on Web Search and Data Mining (WSDM), 2023

Reliable decision from multiple subtasks through threshold optimization: Content moderation in the wildIn Proceedings of the Sixteenth ACM International Conference on Web Search and Data Mining (WSDM), 2023 - OOD-CV@ICCV23

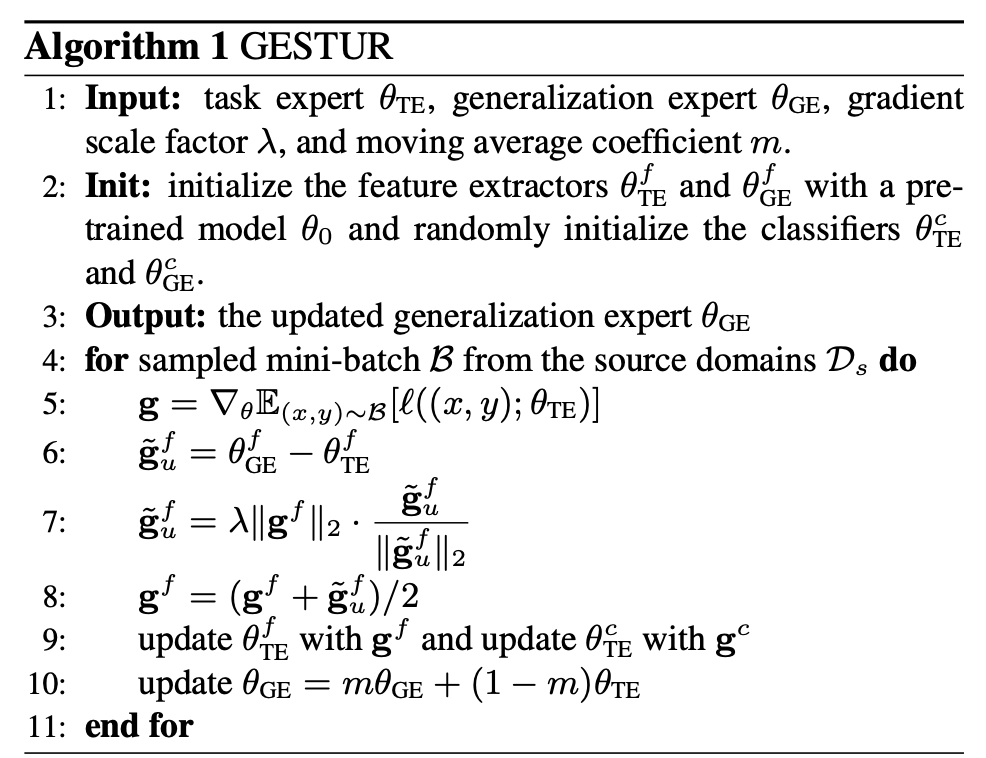

Gradient estimation for unseen domain risk minimization with pre-trained modelsIn Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2023

Gradient estimation for unseen domain risk minimization with pre-trained modelsIn Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2023